How do you defeat an extra-terrestrial monster, when bullets, torpedoes, missiles and lasers have failed?

With Japanese style professional wrestling moves, of course.

(disclosure: This was my favorite show as a kid.)

Part I:

Part II:

Part III:

· Almost half of all lovers are worse at predicting their partner's heart's desire than a stranger who simply uses average gender-specific preferences.

· In addition, the more you know about your inamorata, the worse your success rate is likely to get.

These cheerful holiday tidings are brought to you by "Why It Is So Hard to Predict Our Partner's Product Preferences: The Effect of Target Familiarity on Prediction Accuracy," in the December issue of the scholarly Journal of Consumer Research, published by the University of Chicago Press.

And there's more to it than the cliché of getting your partner the gift that YOU want (although that's a big part of it). In the article they talked about one guy who got his partner a bathroom scale as a gift. I imagine that his thought process must have been something like, "Well, she's always talking about her weight. I bet she'd really like a fancy new scale."

But what does any of this have to do with mad scientists or Jonathan Coulton, you ask? It's the JoCo song Skullcrusher Mountain. The song is about an evil genius/supervillain who falls in love with the girl he's holding captive. My kind of guy! In the song, the protagonist is surprised when his beloved doesn't like the gift he got her.

I made this half-pony half-monkey monster to please you

But I get the feeling that you don’t like it

What’s with all the screaming?

You like monkeys, you like ponies

Maybe you don’t like monsters so much

Maybe I used too many monkeys

Isn’t it enough to know that I ruined a pony making a gift for you?

"Nobody in their wildest dreams expected this. But you have a female dragon on her own. She produces a clutch of eggs and those eggs turn out to be fertile. It is nature finding a way," Kevin Buley of Chester Zoo in England said in an interview.

He said the incubating eggs could hatch around Christmas.

The process by which this happens is called parthenogenesis. From Wikipedia:

Parthenogenesis is distinct from artificial animal cloning, a process where the new organism is identical to the cell donor. Parthenogenesis is truly a reproductive process which creates a new individual or individuals from the naturally varied genetic material contained in the eggs of the mother. A litter of animals resulting from parthenogenesis may contain all genetically unique siblings. Parthenogenic offspring of a parthenogen are, however, all genetically identical to each other and to the mother, as a parthenogen is homozygous.

What this means is that the genome is not passed down whole as in cloning. The cells begin meiosis normally by fusing and shuffling their chromosomes, but instead of dividing into two separate haploid egg cells, they stay together, essentially fertilizing themselves. The Wikipedia article has even been updated to include Komodo dragons.

Recently, the Komodo dragon which normally reproduces sexually was found to also be able to reproduce asexually by parthenogenesis. [3] Because of the genetics of sex determination in Komodo Dragons uses the WZ system (where WZ is female, ZZ is male, WW is inviable) the offspring of this process will be ZZ (male) or WW (inviable), with no WZ females being born. A case has been documented of a Komodo Dragon switching back to sexual reproduction after a parthenogenetic event. [4]. It has been postulated that this gives an advantage to colonisation of islands, where a single female could theoretically have male offspring asexually, then switch to sexual reproduction to maintain higher level of genetic diversity than asexual reproduction alone can generate. [5] Parthenogenesis may also occur when males and females are both present, as the wild Komodo dragon population is approximately 75 per cent male.
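The sex-chromosome bookkeeping in that passage can be sketched in a few lines. This is only a toy model, assuming each unfertilized egg carries one of the mother's two sex chromosomes and simply doubles it; the function name `parthenogenetic_offspring` and the `OUTCOME` table are made up for illustration, not taken from the article.

```python
# Toy model (an assumption, not the article's method): a WZ mother makes
# haploid eggs carrying either W or Z, and each unfertilized egg ends up
# with two copies of that same chromosome.
OUTCOME = {"ZZ": "male", "WZ": "female", "WW": "inviable"}


def parthenogenetic_offspring(mother="WZ"):
    """Map each possible doubled-egg genotype to its outcome."""
    results = {}
    for chromosome in mother:
        genotype = chromosome * 2  # "W" -> "WW", "Z" -> "ZZ"
        results[genotype] = OUTCOME[genotype]
    return results


print(parthenogenetic_offspring())
```

Under that assumption a WZ genotype can never appear, which is why the quoted text says no females are born this way.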

The Eye-in-the-Sea (EITS) was designed to address these questions. The autonomous EITS is a programmable, battery-powered camera and recording system that can be placed on the sea floor and left for 24 to 48 hours to observe the animal life in the dark depths with as little disturbance as possible. It uses far red light illumination that is invisible to most deep-sea inhabitants and an innovative electronic lure that imitates the bioluminescent burglar alarm display of a common deep-sea jellyfish.

The very first time this lure was used it attracted a large squid that is so new to science it can not be placed in any known family.

The article on confabulation repeats a logical fallacy (7 October, p 32). "The idea that we have conscious free will may be an illusion," writes Helen Phillips, because a 1985 experiment "suggested that a signal to move a finger appears in the brain several hundred milliseconds before someone consciously decides to move that finger".

This is silly. The process of making a "conscious decision" to act is obviously not a single event. Factors for and against action must be weighed up, inhibitions must be overcome, environmental constraints must be checked, the muscular signals must be planned so that the action is properly coordinated, and so forth. That fact that somewhere along this complex pathway a signal can be measured indicating movement of a finger is imminent is quite unsurprising. The fallacy lies in inferring that the sensation of "conscious decision" that appears later on is thus illusory or "faked".

We sense all things in a delayed fashion. Our conscious recognition of a flash of light, for example, occurs well after the light actually flashes. Why should the sensation of our own consciousness be any different?

And if we have no free will, then why even bother producing the fake sensation of consciousness after the fact? If we, the conscious entity, could not exert any free will over what our body will do in the future, then our body would presumably conserve energy by simply turning out the lights.

With the exception of his last paragraph, this pretty much describes my view. We are essentially autonomous robots with sensations--including the sensation of our own thoughts.

Dr Walker, who was struck by a bus and a truck in the course of the experiment, spent half the time wearing a cycle helmet and half the time bare-headed. He was wearing the helmet both times he was struck.

He found that drivers were as much as twice as likely to get particularly close to the bicycle when he was wearing the helmet.

...

“By leaving the cyclist less room, drivers reduce the safety margin that cyclists need to deal with obstacles in the road, such as drain covers and potholes, as well as the margin for error in their own judgements.

“We know helmets are useful in low-speed falls, and so definitely good for children, but whether they offer any real protection to somebody struck by a car is very controversial.

“Either way, this study suggests wearing a helmet might make a collision more likely in the first place.”

But why would this be so?

Dr Walker suggests the reason drivers give less room to cyclists wearing helmets is down to how cyclists are perceived as a group.

“We know from research that many drivers see cyclists as a separate subculture, to which they don’t belong,” said Dr Walker.

“As a result they hold stereotyped ideas about cyclists, often judging all riders by the yardstick of the lycra-clad street-warrior.

“This may lead drivers to believe cyclists with helmets are more serious, experienced and predictable than those without.

“The idea that helmeted cyclists are more experienced and less likely to do something unexpected would explain why drivers leave less space when passing.

That actually makes some sense. I know that when I ride on the public bike (and multi-purpose) paths, I always give extra space to unhelmeted riders (or riders with cellphones, iPods, etc.), although I can usually gauge a cyclist's bike-handling skills by simply watching them ride for a second or two.

I'm still a believer in helmets most of the time, but if I'm just making a quick trip to the store, I'll generally pass on the foam hat. My own philosophy is that I always wear a helmet when I'm donning lycra (~90+% of my riding). If I'm out of uniform, the helmet is optional. This fits in well with Dr. Walker's findings. His conclusion is quite apt.

“The best answer is for different types of road user to understand each other better.

“Most adult cyclists know what it is like to drive a car, but relatively few motorists ride bicycles in traffic, and so don’t know the issues cyclists face.

“There should definitely be more information on the needs of other road users when people learn to drive, and practical experience would be even better.

“When people try cycling, they nearly always say it changes the way they treat other road users when they get back in their cars.”

Now what about obeying traffic laws? According to your driver's manual, a bicycle on the road is a vehicle and must obey all the same laws that automobiles follow. I'm sorry but that's just ridiculous. (coalition heads spinning) First, that statement isn't technically true: all motorists must be licensed. Second, the vast majority of those laws were not written with bicycles in mind. Some of the traffic rules seem absolutely preposterous for a vehicle that can travel in the shoulder. Others make no sense for a vehicle that weighs under 200 pounds.

I generally use my own judgement as to when to obey and when not to. I think that I'm actually riding safer when I use my acumen rather than following motorists' rules. I realize that that's just based on gut feeling and anecdotes, but they come from at least ten years' experience riding almost every day. And Coturnix thinks that even cars are safer when negotiating traffic rather than acting like automatons.

Seed Magazine has an article by Linda Baker about how some cities (like Portland--rated the best cycling city in the USA) are even taking some of those rules away in order to make the roads safer.

Portland's so-called "festival street," which opened two months ago, is one of a small but growing number of projects in the United States that seek to reclaim streets used by cars as public places for people, too. The strategy is to blur the boundary between pedestrians and automobiles by removing sidewalks and traffic devices, and to create a seamless multi-purpose urban space.

Combining traffic engineering, urban planning and behavioral psychology, the projects are inspired by a provocative new European street design trend known as "psychological traffic calming," or "shared space." Upending conventional wisdom, advocates of this approach argue that removing road signs, sidewalks, and traffic lights actually slows cars and is safer for pedestrians. Without any clear right-of-way, so the logic goes, motorists are forced to slow down to safer speeds, make eye contact with pedestrians, cyclists and other drivers, and decide among themselves when it is safe to proceed.

Now I'm not advocating riding like a bicycle messenger--those guys are crazy! But seriously, messengers are probably some of the best cyclists out on the road. They are experts at negotiating city traffic. (Another myth is that bicycling in the big city is more dangerous than out in the suburbs. Quite the contrary is true. There are several reasons for this, but the most important is that city motorists expect to see bikes. A suburban motorist who isn't expecting bikes can look right at a cyclist and not "see" him, then say after the accident, "He came out of nowhere!" City drivers know cyclists are entitled to share the road. In all my years of city cycling, I've never had a motorist scream "Get off the fucking road!"--the same cannot be said for the suburbs.) They (messengers) use their judgement just like I do, albeit with different lines they don't cross. When you see a messenger riding like he's oblivious to all the traffic around him, remember that he sees more than you. For example, while an automobile driver is contained inside a box at least two meters behind the front of the vehicle, a cyclist is only a foot or two from the front of his vehicle and out in the open with full use of his peripheral vision.

In the following video of a 2004 New York City bike messenger race, you get to see them in action. The first time I saw this, I thought they were all lunatics. But the more I watch it, the more I recognize how skilled they are. For example, while it may seem that they are recklessly blowing through red lights, if you pay careful attention, you'll see that they are following each other's cues as well as running interference for each other. And remember that the helmet-cam doesn't have the same perspective and peripheral vision that the cyclist does. In fact, the most dangerous thing I saw was the guy coming off the bridge without brakes and with his feet off the pedals. (I also wouldn't recommend getting stoned right before you go riding.)

Welcome to the jungle!

But the state says it's within its rights. The label with Santa might appeal to children, said Maine State Police Lt. Patrick Fleming. The other two labels are considered inappropriate because they show bare-breasted women.

"We stand by our decision and at some point it'll go through the court system and somebody will make the decision on whether we are right or wrong," he said.

Maine also denied label applications for Les Sans Culottes, a French ale, and Rose de Gambrinus, a Belgian fruit beer.

Les Sans Culottes' label is illustrated with detail from Eugene Delacroix's 1830 painting "Liberty Leading the People," which hangs in the Louvre and once appeared on the 100-franc bill. Rose de Gambrinus shows a bare-breasted woman in a watercolor painting commissioned by the brewery.

In a letter to Shelton Brothers, the state denied the applications for the labels because they contained "undignified or improper illustration."

"Was the Ford Pinto, with all its imperfections revealed in crash tests, not designed?"

The article called evolution a "simple" process. In our experience, does a "simple" process generate the type of vast complexity found throughout biology?

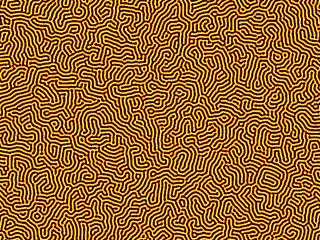

There you have two examples of "complex designs" spawned by "simple processes." Before I bring up a third, I should mention that MarkCC made a point that the above CA is Turing complete. Nice segue since the next image will be a Turing Pattern. This "design" is so named because it derives from the principles laid out in the great mathematician Alan Turing's 1952 paper The Chemical Basis of Morphogenesis. In it, Turing demonstrates how "complex" natural patterns such as a leopard's stripes (or any embryological development) can be generated from simple chemical interactions. This ScienceDaily article describes it thus:

For the simplest example of this, line up a bunch of little tiny machines in a row. Each machine has an LED on top. The LED can be either on, or off. Once every second, all of the CAs simultaneously look at their neighbors to the left and to the right, and decide whether to turn their LED on or off based on whether their neighbors' lights are on or off. Here's a table describing one possible set of rules for the decision about whether to turn the LED on or off.

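| Current State | Left Neighbor | Right Neighbor | New State |
|---|---|---|---|
| On | On | On | Off |
| On | On | Off | On |
| On | Off | On | On |
| On | Off | Off | On |
| Off | On | On | On |
| Off | On | Off | Off |
| Off | Off | On | On |
| Off | Off | Off | Off |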
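The rule table above is small enough to run directly. Here is a minimal sketch, assuming the row of machines wraps around at the ends (the quoted description doesn't say what the edge machines do) and starting from a single lit LED, which is also my choice. The names `RULES`, `step`, `render` and `run` are made up for illustration.

```python
# Rule table from the text: (current, left, right) -> new state. True = LED on.
RULES = {
    (True, True, True): False,
    (True, True, False): True,
    (True, False, True): True,
    (True, False, False): True,
    (False, True, True): True,
    (False, True, False): False,
    (False, False, True): True,
    (False, False, False): False,
}


def step(cells):
    """Update every cell at once from the current states of its neighbors."""
    n = len(cells)
    # Assumption: the row wraps around, so the end cells neighbor each other.
    return [
        RULES[(cells[i], cells[(i - 1) % n], cells[(i + 1) % n])]
        for i in range(n)
    ]


def render(cells):
    """Draw one generation as text: '#' for on, '.' for off."""
    return "".join("#" if c else "." for c in cells)


def run(width=31, steps=15):
    """Return the rendered rows for the start state plus `steps` updates."""
    cells = [False] * width
    cells[-1] = True  # assumption: start with one lit LED at the right edge
    rows = [render(cells)]
    for _ in range(steps):
        cells = step(cells)
        rows.append(render(cells))
    return rows


if __name__ == "__main__":
    print("\n".join(run()))
```

Printing the rows one under another gives a picture of how a pattern grows out of eight lookup entries and nothing else.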

Based on purely theoretical considerations, Turing proposed a reaction and diffusion mechanism between two chemical substances. Using mathematics, he proved that such a simple system could produce a multitude of patterns. If one substance, the activator, produces itself and an inhibitor, while the inhibitor breaks down or inhibits the activator, a spontaneous distribution pattern of substances in the form of stripes and patches can be created. An essential requirement for this is that the inhibitor can be distributed faster through diffusion than the activator, thereby stabilizing the irregular distribution. This kind of dynamic could determine the arrangement of periodic body structures and the pattern of fur markings.

Biologists from the Max Planck Institute of Immunobiology in Freiburg, in collaboration with theoretical physicists and mathematicians at the University of Freiburg, have for the first time supplied experimental proof of the Turing hypothesis of pattern formation. They succeeded in identifying substances which determine the distribution of hair follicles in mice. Taking a system biological approach, which linked experimental results with mathematical models and computer simulations, they were able to show that proteins in the WNT and DKK family play a crucial role in controlling the spatial arrangement of hair follicles and satisfy the theoretical requirements of the Turing hypothesis of pattern formation. In accordance with the predictions of the mathematical model, the density and arrangement of the hair follicles change with increased or reduced expression of the WNT and DKK proteins.

A mirror neuron is a neuron which fires both when an animal performs an action and when the animal observes the same action performed by another (especially conspecific) animal. Thus, the neuron "mirrors" the behavior of another animal, as though the observer were itself performing the action. These neurons have been observed in primates, including humans, and in some birds.

I now understand how this conclusion is reached. but unlike how the article suggests I have no problem in thinking in the infinite. I have no problem with the 'concept' of 0.000~ as a forever continuing sequence of digits. I accept that in all practical purposes 0.000~ might as well be 0 and that math solutions calculate it to be 0. I also accept that it is impossible to have 0.000~ of anything (you cannot hold an infinity). But this does not stop 0.000~ (as a logical concept) forever being >0.

On to the main issue: 0.0000000~ infinite 0s is NOT equal to 0, because 0.0000000~infinite 0s is not a number. The concept of an infinite number of 0s is meaningless (or at least ill-defined) in this context because infinity is not a number. It is more of a process than anything else, a notion of never quite finishing something.

However, we can talk intelligently about a sequence:

{.0, .00, .000, ... }

in which the nth term is equal to sum(0/(10^i), i=1..n). We can examine its behavior as n tends to infinity.

It just so happens that this sequence behaves nicely enough that we can tell how it will behave in the long term. It will converge to 0. Give me a tolerance, and I can find you a term in the sequence which is within this tolerance to 0, and so too will all subsequent terms in the sequence.

The limit is equal to 0, but the sequence is not. A sequence is not a number, and cannot be equated to one.
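The convergence claim in that comment can be written out in one line. The symbols s_n and ε are my own labels for the comment's "nth term" and "tolerance"; this is just a sketch of the standard limit argument, not something the commenter wrote.

```latex
s_n = \sum_{i=1}^{n} \frac{0}{10^{i}} = 0 \quad \text{for every } n \ge 1,
\qquad \text{so} \qquad
|s_n - 0| = 0 < \varepsilon \quad \text{for every } \varepsilon > 0,
\qquad \text{hence} \qquad
\lim_{n \to \infty} s_n = 0 .
```

Under the usual convention, a repeating decimal is shorthand for exactly this limit, so the question is about what the notation names, not about the sequence itself.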

We hold 1/3 = 0.333~

but as 0.333~ - 0.333~ = 0.000~ and 0.000~ ≠ 0.0 and 1/3 - 1/3 = 0/1 then surely 0.333~ ≠ 1/3.

Confusing fractions and decimal just highlights the failings of decimal math. 0.000~ does not equal 0.0. If it did, the 0.000~ would simply not exist as a notion. It’s very existence speaks of a continually present piece. The very piece that would not render it 0.0. It keeps approaching emptyness by continually adding another decimal place populated by a 0, which does nothing to diminish the fact that you need to add yet more to it to make it the true 0.0 and so on to infinity.

There is obviously an error in the assumption that 1/3 = 0.333~ or that it highlights the fact that decimal can not render 1/3 accurately. Because 0.000~ ≠ 0.0

Ah I see the problem.. It's just a rounding error built into the nature of decimal Math. there is no easy way to represent a value that is half way between 0.000~ and 0.0 in decimal because the math isn’t set up to deal with that. Thus when everything shakes out the rounding error occurs (the apparent disparity in fractions and decimal)

No it does not. by it's very nature 0.000000000000rec is always just slightly greater than 0.0 thus they are not equal.

But for practical purposes then it is safe to conclude equivalency as long as you remember that they are not in reality equivalent.

0.00000~ is infinitely close to 0.

For practical purposes (and mathematically) it is 0.

But is it really the same as 0?

I don't know.

0.00000~ is not per definition equal to 0. This only works in certain fields of numbers.

What worries me about this proof is that it assumes that 0.0000~ can sensibly be multiplied by 10 to give 00.0000~ with the same number of 0s after the decimal point. Surely this is cheating? In effect, an extra 0 has been sneaked in, so that when the lower number is subtracted, the 0s disappear.

The other problem I have is that no matter how many 0s there are after the decimal point, adding an extra 0 only ever takes you 0/10 of the remaining distance towards unity... so even an infinite number of 0s will still leave you with a smidgen, albeit one that is infinitely small (still a smidgen nevertheless).

In reality,I think 0.0..recurring is 0.

But if the 'concept' of infinity exists, then as a 'concept' .0 recurring is not 0.

From what I know, the sum to infinity formula was to bridge the concept of infinity into reality (to make it practical), that is to provide limits.*

It's like the "if i draw 1 line that is 6 inches and another that is 12, conceptually they are made up of the same number of infinitesimally small points" but these 'points' actually dont exist in reality.

Forgot the guy who came up with the hare and tortoise analogy, about how the hare would not be able to beat the tortoise who had a head-start - as the hare had to pass an infinite number of infinitesimally small points before he'd even reach the tortoise.

He used that as 'proof' that reality didn't 'exist' rather than what was 'obvious' to me (when I heard it) - that infinity didn't exist in reality.

So my conclusion is 0.0 recurring is conceptually the infinitesimal small value numerically after the value 0. (If anyone disagrees, then what is the closest value to 0 that isn't 0 and is greater than 0(mathematically)?)

In reality, it is 0 due to requirements of limits.

Can anyone prove the sum to inifinity formula from 'first prinicipals'?

Okay, non-math-geek, here. Isn't there some difference between a number that can be expressed with a single digit and one that requires an INFINITE number of symbols to name it? I've always imagined that infinity stretches out on either side of the number line, but also dips down between all the integers. Isn't .0000etc in one of those infinite dips?

Haha not only are there holes in your logic, but there are holes in your mathematics.

First of all, by definition the number .00000000... cannot and never will be an integer. An integer is a whole number. .00000000... is not, obviously, hence the ...

The ... is also a sad attempt at recreating the concept of infinity. I only say concept because you can't actually represent infinity on a piece of paper. Except by the symbol ∞. I found a few definitions of infinity, most of them sound like this: "that which is free from any possible limitation." What is a number line? A limitation. For a concrete number which .0000000... is not. (Because it's continuing infinitely, no?)

Also, by your definition, an irrational number is a number that cannot be accurately portrayed as a fraction. Show me the one fraction (not addition of infinite fractions) that can represent .00000000...

You can't, can you?

Additionally, all of your calculations have infinitely repeating decimals which you very kindly shortened up for us (which you can't do, because again, you can't represent the concept of infinity on paper or even in html). If you had stopped the numbers where you did, the numbers would have rounded and the calculation would indeed, equal 0.

Bottom line is, you will never EVER get 0/1 to equal .0000000... You people think you can hide behind elementary algebra to fool everyone, but in reality, you're only fooling yourselves. Infinity: The state or quality of being infinite, unlimited by space or time, without end, without beginning or end. Not even your silly blog can refute that.

When you write out .00000000... you are giving it a limit. Once your fingers stopped typing 0s and started typing periods, you gave infinity a limit. At no time did any of your equations include ∞ as a term.

In any case, Dr. Math, a person who agrees with your .000000 repeating nonsense, also contradicts himself on the same website. "The very sentence "1/infinity = 0" has no meaning. Why? Because "infinity" is a concept, NOT a number. It is a concept that means "limitlessness." As such, it cannot be used with any mathematical operators. The symbols of +, -, x, and / are arithmetic operators, and we can only use them for numbers."

Wait, did I see a fraction that equals .00000 repeating? No I didn't. Because it doesn't exist.

And for your claim that I have to find a number halfway between .0000 repeating and 0 is absurd. That's like me having you graph the function y=1/x and having you tell me the point at which the line crosses either axis. You can't. There is no point at which the line crosses the axis because, infinitely, the line approaches zero but will never get there. Same holds true for .0000 repeating. No matter how many 0s you add, infinitely, it will NEVER equal zero.

Also, can I see that number line with .000000000000... plotted on it? That would be fascinating, and another way to prove your point.

And is .00000000... an integer? I thought an integer was a whole number, which .00000000... obviously is not.

Even with my poor mathematical skills I can see very clearly that while 0 may be approximately equal to 0.000000000... ("to infinity and beyond!"); this certainly does not mean that 0 equals 0.000000000...

It's a matter of perspective and granularity, if you have low granularity then of course the 2 numbers appear to be the same; at closer inspection they are not.

I'm no mathematics professor, and my minor in mathematics from college is beyond a decade old, but you cannot treat a number going out to infinity as if it were a regular number, which is what is trying to be done here. Kind of the "apples" and "oranges" comparison since you cannot really add "infinity" to a number.

Yes, any number going out to an infinite number of decimal points will converge upon the next number in the sequence (eg: .000000... will converge so closely to 0 that it will eventually become indistinguishable from 0 but it will not *be* 0).

The whole topic is more of a "hey, isn't this a cool thing in mathematics that really makes you think?" than "let's actually teach something here."

.00000... equals 0 only if you round down! It will always be incrementing 1/millionth, 1/billionth, or 1/zillionth of a place, (depending on how far you a human actually counts). If we go out infinitely, there is still something extra, no matter how small, that keeps .0000000... for actually being 0.

I don't agree, actually. I do believe in a sort of indefinable and infinitely divisible amount of space between numbers ... especially if we break into the physical world ... like ... how small is the smallest thing? an electron? what is that made up of? and what is that made up of? Is there a thing that is just itself and isn't MADE UP OF SMALLER THINGS? It's really hard to think about ... but I think it's harder to believe that there is one final smallest thing than it is to believe that everything, even very small things, are made up of smaller things.

And thus ... .0000 repeating does not equal zero. It doesn't equal anything. It's just an expression of the idea that we can't cut an even break right there. Sort of like thirds. You cannot cut the number 1 evenly into thirds. You just can't. It's not divisible by 3. But we want to be able to divide it into thirds, so we express it in this totally abstract way by writing 1/3, or .3333 repeating. But, if .0000 repeating adds up to 0, than what does .33333 repeating add up to? and don't say 1/3, because 1/3 isn't a number ... it's an idea.

That's my rational.

The problem is with imagining infinite numbers.

When you multiply .000... with 10 there is one less digit on the infinite number of result which is 0.000 .... minus 0.000...0. It is almost impossible in my opinion to represent graphically .000..x10 in calculation, hence confusion.

I know it is crazy to think of last number of infinite number but infinite numbers are crazy itself.

Through proofs, yes, you have "proven" that .0 repeating equals 0 and also through certain definitions.

But in the realm of logic and another definition you are wrong. .0 repeating is not an integer by the definition of an integer, and 0 most certainly is an integer. Mathematically, algebraicly...whatever, they have the same value, but that doesn't mean they are the same number.

I'm getting more out of "hard" mathematics and more into the paradoxical realm. Have you ever heard of Zeno's paradoxes? I think that's the most relevant counter-argument to this topic. Your "infinity" argument works against you in this respect. While you can never come up with a value that you can represent mathematically on paper to subtract from .000... to equal zero or to come up with an average of the two, that doesn't mean that it doesn't conceptually exist. "Infinity" is just as intangible as whatever that missing value is.

But really in the end, this all just mathematical semantics. By proof, they are equal to each other but otherwise they are not the same number.

It is obvious to me that you do not understand the concept of infinity. Please brush up on it before you continue to teach math beyond an elementary school level. The problem with your logic is that .0 repeating is not an integer, it is an estimation of a number. While .0 repeating and 0 behave identical in any and all algebraic situations, the two numbers differ fundamentally by an infinitely small amount. Therefore, to say that .0 repeating and 0 are the same is not correct. As you continue .0000000... out to infinity, the number becomes infinitely close to 0, however it absolutely never becomes one, so your statement .000 repeating =0 is not correct.

I wrote a short computer program to solve this.

CODE:

    Try
        If 0 = 0.0000000000... Then
            Print True
        Else
            Print False
        End If
    Catch Exception ex
        Print ex.message
    End Try

The result: "Error: Can not convert theoretical values into real world values."

There you have it folks! End of discussion.

If you could show me a mathematical proof that 1 + 1 = 3, that does not mean 1 + 1 = 3, it means there is something wrong with the laws of our math in general.

We know instinctively that 0 does not equal 0.000000...

If you can use math to show differently, then that proves not that 0 = 0.00000... but that there is something wrong with your math, or the laws of our math itself.

Thus, every proof shown in these discussions that tryed to show 0=0.000... is wrong.

0 != 0.000...

The problem here is that usualy only math teachers understand the problem enough to explain it, and unfortunatly they are also the least likly candidates to step out of the box and dare consider the laws of math that they swear by are actualy at fault.

"A lot of folks have been asking about the recount. Let me tell you about the recount.

I've said the people of Virginia, the owners of the government, have spoken. They've spoken in a closely divided voice. We have two 49s, but one has 49.55 and the other has 49.25, after at least so far in the canvasses. I'm aware this contest is so close that I have the legal right to ask for a recount at the taxpayers' expense. I also recognize that a recount could drag on all the way until Christmas.

It is with deep respect for the people of Virginia and to bind factions together for a positive purpose that I do not wish to cause more rancor by protracted litigation which would, in my judgment, not alter the results."

What this means is that the vote count in an election is not "the true" count, but rather a poll with a very large sample size, and can thus be treated as such. He goes on to offer a suggestion for determining a winner, which if not met should trigger a run-off election.

The rub in these cases is that we could count and recount, we could examine every ballot four times over and we’d get — you guessed it — four different results. That’s the nature of large numbers — there is inherent measurement error. We’d like to think that there is a “true” answer out there, even if that answer is decided by a single vote. We so desire the certainty of thinking that there is an objective truth in elections and that a fair process will reveal it.

But even in an absolutely clean recount, there is not always a sure answer. Ever count out a large jar of pennies? And then do it again? And then have a friend do it? Do you always converge on a single number? Or do you usually just average the various results you come to? If you are like me, you probably settle on an average. The underlying notion is that each election, like those recounts of the penny jar, is more like a poll of some underlying voting population.

In an era of small town halls and direct democracy it might have made sense to rely on a literalist interpretation of “majority rule.” After all, every vote could really be accounted for. But in situations where millions of votes are cast, and especially where some may be suspect, what we need is a more robust sense of winning. So from the world of statistics, I am here to offer one: To win, candidates must exceed their rivals with more than 99 percent statistical certainty — a typical standard in scientific research. What does this mean in actuality? In terms of a two-candidate race in which each has attained around 50 percent of the vote, a 1 percent margin of error would be represented by 1.29 divided by the square root of the number of votes cast.

If this sounds like gobbledygook to you, let me try to clarify it by throwing some Greek letters at you. I couldn't find any of my old Statistics texts, but the Wikipedia article is actually quite good, so I will draw from it. (For some even better statistics primers, check out Zeno and Echidne.) Let's start with some definitions (according to Wiki):

The margin of error expresses the amount of the random variation underlying a survey's results. This can be thought of as a measure of the variation one would see in reported percentages if the same poll were taken multiple times. The margin of error is just a specific 99% confidence interval, which is 2.58 standard errors on either side of the estimate.

Standard error = $\sqrt{p(1-p)/n}$, where p is the probability (in the case of an election, it is the vote percentage, so for a dead-heat race, p ≈ 0.5) and n is the sample size (total number of voters).

Again, I'll leave it up to the reader to look up how the confidence interval formula is derived; it's a bit beyond the scope of this post. What it means is that since the margin of error is the expected variation from sampling to sampling, we can see it as a multiple of standard errors from the results. And the higher the confidence level, the more standard errors go into the margin of error. Another way of looking at it is that if you want to be 99% confident that a recount will fall into a certain interval around your result, that interval will need to be wider than if you only wanted to be 68% confident. According to Wiki (again, I'll let you look up the derivation if you wish):

Plus or minus 1 standard error is a 68 % confidence interval, plus or minus 2 standard errors is approximately a 95 % confidence interval, and a 99 % confidence interval is 2.58 standard errors on either side of the estimate.

Therefore,

Margin of error (99%) = $2.58 \times \sqrt{0.5(1-0.5)/n} = 1.29/\sqrt{n}$

Which is the formula Dalton mentioned in his article. Anyway, I hope my condensed explanation at least helps a little to explain what those numbers mean.

Now, on to the Virginia race. The total votes cast: n = 2,338,111. (For simplicity, I'll be ignoring the Independent candidate Parker and rounding out to p=0.5, so as to use the above formula.) Therefore the margin of error is 0.08%, which comes out to 1972.5 votes. That means that we can be 99% sure that a recount of Allen's votes will be within +/- 1972.5 votes of what it was before. The actual vote count difference between Allen and Webb was 7231 votes, well outside the margin of error. 7231 votes corresponds to a confidence interval of 9.5 standard errors. Allen could've spent the rest of his life recounting the votes and not expected to alter the results. He was absolutely right to concede.
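If you'd like to check the arithmetic yourself, here is a minimal Python sketch of the calculation above. It assumes the same simplifications I made (a two-candidate race with p = 0.5), and the function and variable names are just my own labels, not anything from Dalton's article or Wikipedia.

```python
import math

def margin_of_error_99(n, p=0.5, z=2.58):
    """99% margin of error as a fraction of the vote: z * sqrt(p(1-p)/n)."""
    return z * math.sqrt(p * (1 - p) / n)

n = 2_338_111   # total votes cast in the Virginia race (figure from the post)
lead = 7_231    # vote difference between Allen and Webb (figure from the post)

moe_fraction = margin_of_error_99(n)        # as a fraction of all votes
moe_votes = moe_fraction * n                # same margin expressed in votes
std_error_votes = math.sqrt(0.25 / n) * n   # one standard error, in votes
lead_in_std_errors = lead / std_error_votes

print(f"Margin of error: {moe_fraction:.2%} of the vote, about {moe_votes:.1f} votes")
print(f"Lead of {lead} votes is about {lead_in_std_errors:.1f} standard errors")
```

Run as-is, this should reproduce the figures quoted above: a margin of roughly 0.08% (about 1972.5 votes) and a lead of about 9.5 standard errors.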

"The God of the Old Testament is arguably the most unpleasant character in all fiction: jealous and proud of it; a petty, unjust, unforgiving control-freak; a vindictive, bloodthirsty ethnic cleanser; a misogynistic, homophobic, racist, infanticidal, genocidal, filicidal, pestilential, megalomaniacal, sadomasochistic, capriciously malevolent bully."

Of course the paranormalist or occultist could claim that the Hollywood portrayal is a rather unsophisticated and inaccurate representation of their beliefs, and thus the discussion we give here is moot.

Ghosts are held to be able to walk about as they please, but they pass through walls and any attempt to pick up an object or affect their environment in any other way leads to material-less inefficacy — unless they are poltergeists, of course!

Let us examine the process of walking in detail. Now walking requires an interaction with the floor and such interactions are explained by Newton’s Laws of Motion.

blah, blah, blah ...

Thus the ghost has an effect on the physical universe. If this is so, then we can detect the ghost via physical observation. That is, the depiction of ghosts walking contradicts the precept that ghosts are material-less.

So which is it? Are ghosts material or material-less? Maybe they are only material when it comes to walking.

He's basing his entire calculation on the assumption that every vampire creates a new vampire every time it feeds. I think Dr. Efthimiou needs to go back to the source. The only way to create a new vampire is to drink the blood of the Prince of Darkness himself. Dracula is like the queen bee: the only member of the hive allowed to reproduce. So instead of a geometric progression, you get an arithmetic progression up until the Count decides the vampire population is just right for him, then it plateaus. Once again, math that a child would understand.

To disprove the existence of vampires, Efthimiou relied on a basic math principle known as geometric progression.

Efthimiou supposed that the first vampire arrived Jan. 1, 1600, when the human population was 536,870,911. Assuming that the vampire fed once a month and the victim turned into a vampire, there would be two vampires and 536,870,910 humans on Feb. 1. There would be four vampires on March 1 and eight on April 1. If this trend continued, all of the original humans would become vampires within two and a half years and the vampires' food source would disappear.
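The doubling described in that passage is easy to check. Below is a small Python sketch under the article's stated assumptions (one vampire to start, each vampire feeds once a month, every victim turns). The function name is my own invention for illustration, not something from Efthimiou's paper.

```python
def months_until_no_humans(humans=536_870_911, vampires=1):
    """Count the months until the human population reaches zero,
    assuming every vampire turns exactly one human per month."""
    months = 0
    while humans > 0:
        # Each vampire feeds once; it cannot turn more humans than remain.
        turned = min(vampires, humans)
        humans -= turned
        vampires += turned
        months += 1
    return months, vampires

months, final_vampires = months_until_no_humans()
print(f"Humans run out after {months} months ({months / 12:.1f} years)")
print(f"Final vampire count: {final_vampires:,}")
```

The starting population of 536,870,911 is one less than 2 to the 29th power, which is why the doubling lands on exactly zero humans: the loop ends after 29 months, or a little under two and a half years.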

There exists a second sort of zombie legend which pops its head up throughout the western hemisphere — the legend of ‘voodoo zombiefication’. This myth is somewhat different from the one just described in that zombies do not multiply by feeding on humans but come about by a voodoo hex being placed by a sorcerer on one of his enemies. The myth presents an additional problem for us: one can witness for oneself very convincing examples of zombiefication by traveling to Haiti or any number of other regions in the world where voodoo is practiced.